| MPEG NNR |
|:---:|
| Neural Network Compression and Representation Standard |

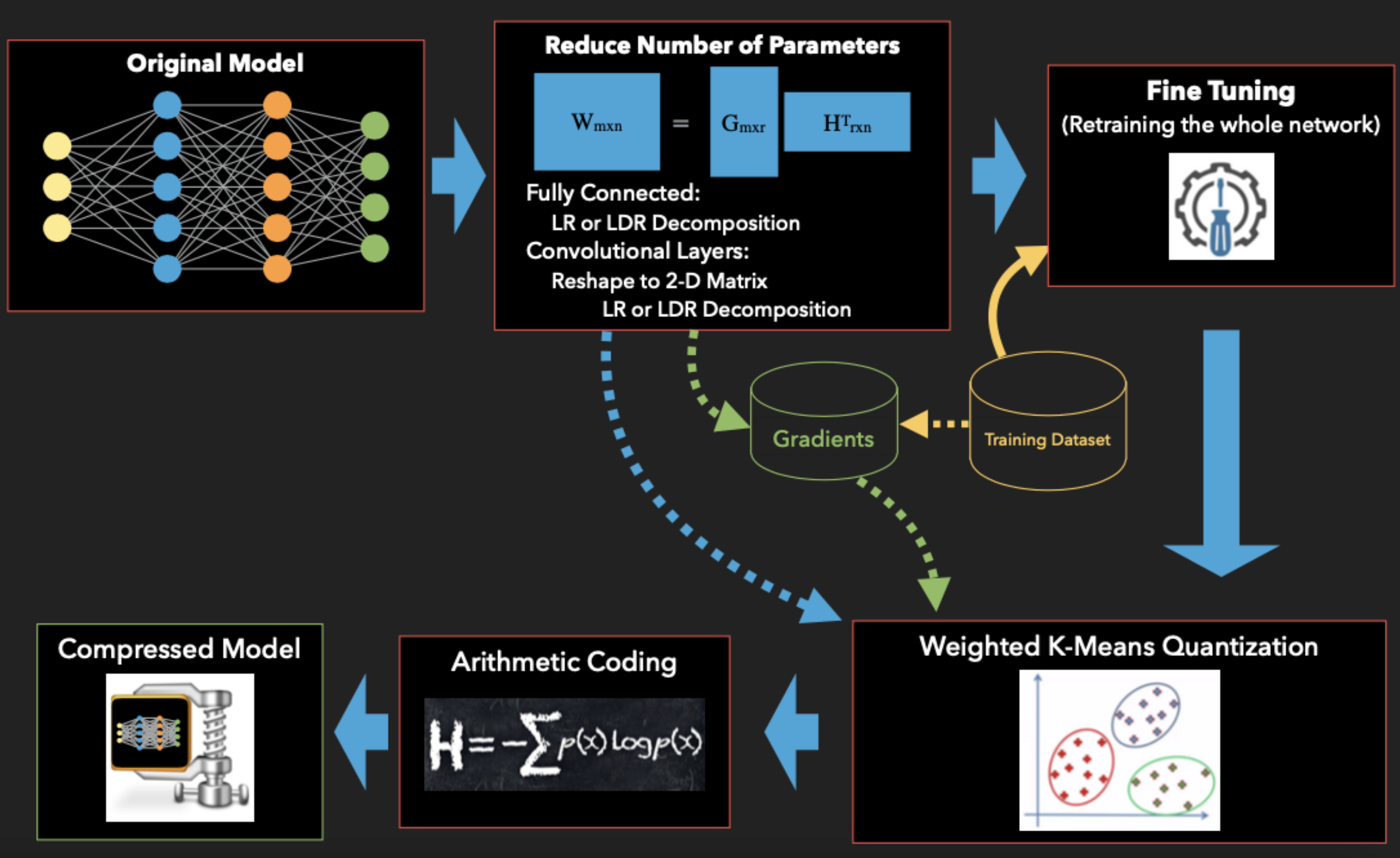

NNR is the first international standard developed by the ISO/IEC Moving Picture Experts Group (MPEG) for compressing deep neural networks. It defines several compression methods that can be used individually or combined into a compression pipeline. These methods include low-rank decomposition, sparsification, pruning, quantization, and arithmetic coding. For more information about these methods, please refer to this paper.
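As a rough illustration of how such methods can be chained, the sketch below passes a synthetic weight matrix through magnitude pruning and uniform scalar quantization, then estimates the coded size. It is not the NNR reference pipeline. The helper names (`prune`, `quantize`, `entropy_bits`), the sparsity and the quantization step are assumptions chosen for illustration, and the empirical entropy is only a stand-in for NNR's arithmetic coder.

```python
import numpy as np

def prune(w, sparsity):
    """Magnitude pruning: zero the `sparsity` fraction of weights with the smallest |w|."""
    threshold = np.quantile(np.abs(w), sparsity)
    return np.where(np.abs(w) < threshold, 0.0, w)

def quantize(w, step):
    """Uniform scalar quantization: each weight becomes an integer index (w ~ index * step)."""
    return np.round(w / step).astype(np.int64)

def entropy_bits(indices):
    """Ideal bit cost of the indices under a memoryless model (empirical Shannon entropy).
    Stand-in for the arithmetic coder; a context-adaptive coder may do better."""
    _, counts = np.unique(indices, return_counts=True)
    p = counts / counts.sum()
    return float(-(counts * np.log2(p)).sum())

rng = np.random.default_rng(0)
w = rng.normal(0.0, 0.05, size=(256, 128)).astype(np.float32)  # synthetic layer

step = 0.01                        # hypothetical quantization step size
w_sparse = prune(w, sparsity=0.5)  # half of the weights become exactly zero
q = quantize(w_sparse, step)       # integer indices that would be entropy coded
w_rec = q * step                   # decoder-side reconstruction
bits = entropy_bits(q)
print(f"float32: {w.size * 32} bits, estimated after pipeline: {bits:.0f} bits")
```

Pruning first makes most indices zero, which is what lets the entropy-coding stage shrink the bitstream; the quantization step trades reconstruction error (at most `step / 2` per weight) against the number of distinct symbols.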

As a member of InterDigital's NNR team, I participated in the standardization work and contributed to some of the methods included in the standard. Two of these methods, low-rank decomposition and codebook quantization, are implemented in the Fireball DNN library.
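A minimal NumPy sketch of the two ideas, assuming a plain 2-D weight matrix: low-rank decomposition (here via truncated SVD) replaces an m×n matrix with two thin factors, and codebook quantization (here via 1-D k-means) replaces each weight with an index into a small table of shared values. This is not Fireball's API: `low_rank_decompose` and `codebook_quantize` are hypothetical helpers, and the NNR specification may define these methods differently.

```python
import numpy as np

def low_rank_decompose(w, rank):
    """Approximate w (m x n) by a @ b, with a: (m x rank) and b: (rank x n), via truncated SVD."""
    u, s, vt = np.linalg.svd(w, full_matrices=False)
    a = u[:, :rank] * s[:rank]  # fold the singular values into the left factor
    b = vt[:rank, :]
    return a, b

def codebook_quantize(w, num_codes, iters=20):
    """Map every weight to one of num_codes shared values using 1-D k-means (Lloyd's algorithm)."""
    flat = w.ravel()
    codebook = np.linspace(flat.min(), flat.max(), num_codes)  # linear initialisation
    for _ in range(iters):
        idx = np.argmin(np.abs(flat[:, None] - codebook[None, :]), axis=1)
        for k in range(num_codes):
            members = flat[idx == k]
            if members.size:  # leave empty clusters unchanged
                codebook[k] = members.mean()
    idx = np.argmin(np.abs(flat[:, None] - codebook[None, :]), axis=1)
    return codebook, idx.reshape(w.shape)

rng = np.random.default_rng(0)
# Synthetic 64 x 32 layer that is close to rank 8.
w = rng.normal(size=(64, 8)) @ rng.normal(size=(8, 32)) + 0.01 * rng.normal(size=(64, 32))

a, b = low_rank_decompose(w, rank=8)
rel_err = np.linalg.norm(w - a @ b) / np.linalg.norm(w)
print(f"parameters: {w.size} -> {a.size + b.size}, relative error {rel_err:.4f}")  # 2048 -> 768

codebook, idx = codebook_quantize(b, num_codes=16)  # 16 codes -> 4-bit indices
b_q = codebook[idx]
print(f"distinct values in b: {np.unique(b).size} -> {np.unique(b_q).size}")
```

For an m×n layer, a rank-r factorization stores r(m+n) values instead of mn, so it only saves memory when r < mn/(m+n). The two methods also compose, as in the example where the factor `b` is itself codebook-quantized.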

An important feature of both methods is that they are back-propagation friendly: the model can be re-trained (fine-tuned) after compression. Fine-tuning uses a subset of the training samples to train the compressed model for a few (5 to 10) epochs.
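Why both methods survive back-propagation can be seen on a toy linear layer. The sketch below assumes a hypothetical fine-tuning subset (`x`, `y`) and uses full-batch gradient descent, so each step is one pass over the subset; the step counts and learning rates are arbitrary choices for this toy problem. The low-rank factors are trained directly through the chain rule. For the codebook, the indices stay fixed and each shared value receives the sum of the gradients of all weights mapped to it, the scheme popularized by Deep Compression (Han et al.). Neither loop is claimed to be Fireball's or NNR's actual training procedure.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical fine-tuning subset: inputs with unequal feature scales, and the
# outputs of the original (uncompressed) layer w_orig as regression targets.
x = rng.normal(size=(512, 32)) * np.linspace(0.1, 2.0, 32)
w_orig = rng.normal(size=(32, 16)) / np.sqrt(32)
y = x @ w_orig

def loss_and_grad(w):
    """Mean squared error of x @ w against y, and its gradient with respect to w."""
    err = x @ w - y
    return float(np.mean(err ** 2)), x.T @ (2.0 * err / err.size)

# 1) Low-rank model w = a @ b, initialised from a rank-4 truncated SVD of w_orig.
u, s, vt = np.linalg.svd(w_orig, full_matrices=False)
a, b = u[:, :4] * s[:4], vt[:4, :]
lowrank_before, _ = loss_and_grad(a @ b)
for _ in range(500):  # full-batch gradient descent: one step = one pass over the subset
    _, g = loss_and_grad(a @ b)
    # Chain rule through the product: dL/da = g @ b.T, dL/db = a.T @ g.
    a, b = a - 0.05 * (g @ b.T), b - 0.05 * (a.T @ g)
lowrank_after, _ = loss_and_grad(a @ b)

# 2) Codebook model w = codebook[idx] with 8 shared values (3-bit indices).
codebook = np.linspace(w_orig.min(), w_orig.max(), 8)
for _ in range(20):  # 1-D k-means (Lloyd) iterations to build the codebook
    idx = np.argmin(np.abs(w_orig[..., None] - codebook), axis=-1)
    for k in range(codebook.size):
        if np.any(idx == k):
            codebook[k] = w_orig[idx == k].mean()
idx = np.argmin(np.abs(w_orig[..., None] - codebook), axis=-1)  # indices are now frozen
codebook_before, _ = loss_and_grad(codebook[idx])
for _ in range(300):
    _, g = loss_and_grad(codebook[idx])
    # Each shared value receives the summed gradient of every weight that uses it.
    codebook -= 0.005 * np.bincount(idx.ravel(), weights=g.ravel(), minlength=codebook.size)
codebook_after, _ = loss_and_grad(codebook[idx])

print(f"low-rank: loss {lowrank_before:.4f} -> {lowrank_after:.4f}")
print(f"codebook: loss {codebook_before:.4f} -> {codebook_after:.4f}")
```

In both cases the compressed structure is preserved during training: the model stays rank 4, and the codebook model still uses only 8 distinct values, so fine-tuning recovers accuracy without undoing the compression.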

The following diagram shows an example of a compression pipeline based on the MPEG NNR standard.